4th February 2016

This article describes a reverse geocoding experiment performed on Swedish heritage data provided by the SOCH API. I’m using reverse geocoding and a bit of magic to improve location-based search by also returning items that aren’t georeferenced. This practice can be applied to almost any dataset.

A friend of mine pointed out that this could be achieved by selecting location names using an ordinary bounding box. The reason I decided to go with reverse geocoding (except for the fact that it’s more fun) is that it’s more flexible: you can use it along a path or with just a single point without building some rubbish estimated bounding box.

SOCH contains about 1.6 million georeferenced objects, all accessible from a map, but if you are doing research on a location you will need more than just the georeferenced objects. You will probably end up making a few searches (both free text and location text) on multiple place names in addition to your bounding box search.

All those searches can be automated from the bounding box search.

For my setup I’m using the open source geocoder Pelias, with place name data from OpenStreetMap (pipeline) and Geonames (pipeline). Adding your own data is not a major task either (pelias-model).

You can use any geocoder with useful data (such as Google’s or Mapzen’s public APIs), but the results will differ.

I will make one urban and one non-urban search. The non-urban search will happen around Flodafors in Sörmland, Sweden (59.067, 16.359), near the well-known church Floda kyrka. The urban search will happen around the street Vallgatan in Nyköping, Sweden (58.74, 17.01), next to the former castle Nyköpingshus.

I have set the geocoder to always give me the ten closest place names; those are then used in the actual text-based searches.
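Fetching the ten closest place names could be sketched roughly like this. It assumes a self-hosted Pelias instance exposing its /v1/reverse endpoint at a hypothetical http://localhost:3100, and that each GeoJSON feature carries its place name in properties.name. The helper names and the sample response are made up for illustration, not taken from my setup.

```php
<?php
// Build a Pelias reverse geocoding URL for a point.
// $baseUrl is an assumption: point it at your own Pelias instance.
function reverseGeocodeUrl(string $baseUrl, float $lat, float $lon, int $size = 10): string
{
    $query = http_build_query([
        'point.lat' => $lat,
        'point.lon' => $lon,
        'size'      => $size, // number of place names to return
    ], '', '&');

    return rtrim($baseUrl, '/') . '/v1/reverse?' . $query;
}

// Pull the unique place names out of a GeoJSON response body.
function placeNames(string $geoJson): array
{
    $data = json_decode($geoJson, true);
    $names = [];
    foreach ($data['features'] ?? [] as $feature) {
        if (isset($feature['properties']['name'])) {
            $names[] = $feature['properties']['name'];
        }
    }

    return array_values(array_unique($names));
}

// The non-urban search point from this article.
echo reverseGeocodeUrl('http://localhost:3100', 59.067, 16.359), "\n";

// A made-up, heavily shortened response, only to show the parsing step.
$sample = '{"features":[{"properties":{"name":"Floda kyrka"}},'
        . '{"properties":{"name":"Flodafors"}},'
        . '{"properties":{"name":"Floda kyrka"}}]}';
print_r(placeNames($sample));
```

In a real run you would fetch the URL (for example with file_get_contents()) and pass the response body to placeNames().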

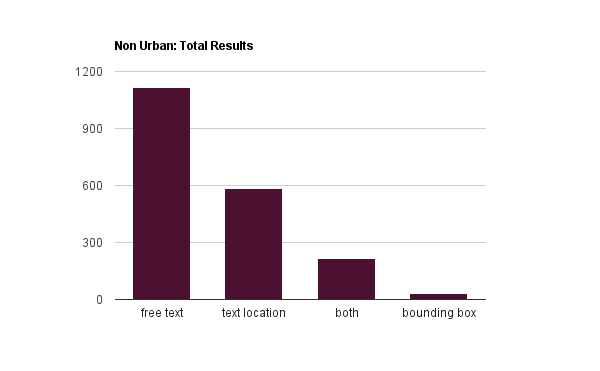

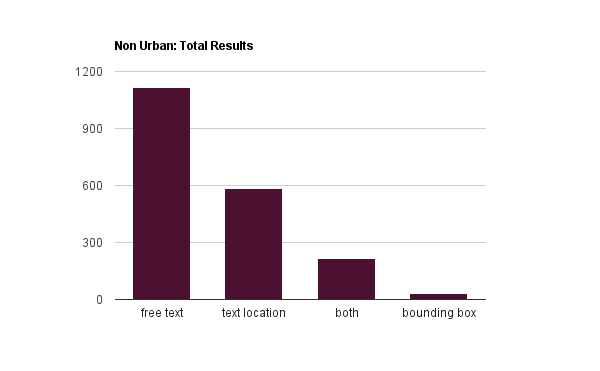

The non-urban text-based searches resulted in a total of 1724 unique results: the free-text searches returned 1330 results and the text-location ones 808 results. 214 results were retrieved from both the free-text searches and the text-location ones.
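Counting unique and overlapping results from the two kinds of searches can be sketched as below. It assumes each result is identified by its item URI as a plain string. The function name and the sample URIs are hypothetical.

```php
<?php
// Summarize two result sets, each given as a list of item URIs.
function summarizeSearches(array $freeText, array $textLocation): array
{
    $free = array_unique($freeText);
    $location = array_unique($textLocation);

    return [
        // items found by at least one of the search types
        'unique'  => count(array_unique(array_merge($free, $location))),
        // items found by both search types
        'overlap' => count(array_intersect($free, $location)),
    ];
}

// Made-up URIs, only to show the shape of the data.
$freeText = ['raa/kmb/1', 'raa/kmb/2', 'shm/site/3'];
$textLocation = ['raa/kmb/2', 'shm/site/3', 'shm/site/4', 'shm/site/4'];

print_r(summarizeSearches($freeText, $textLocation));
```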

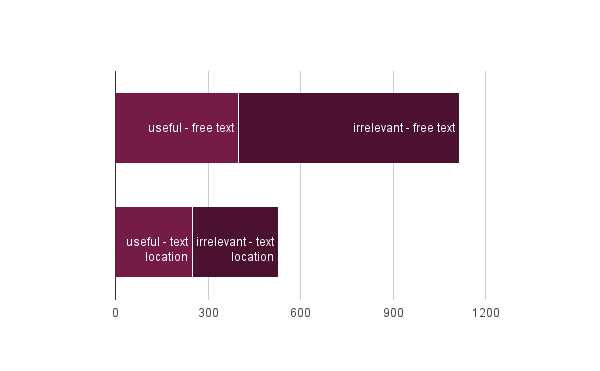

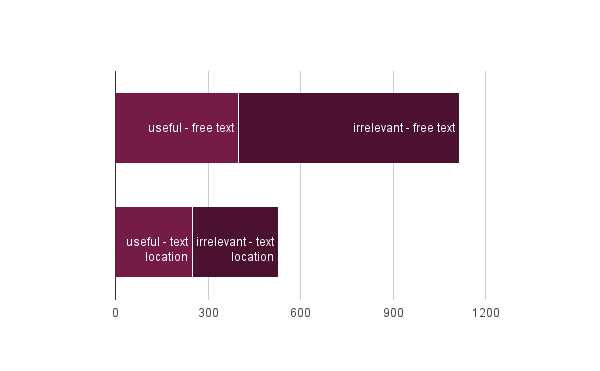

Only about 40% of the unique results were relevant for the requested location. I expected the useful results to be even fewer, so even if 40% seems like a bad result it’s actually a good one. It’s quite easy to sort out a lot of irrelevant results by searching each object’s content for the names of other regions. If an object contains the name of any region other than the one where the location is situated, it can be removed from the final results.
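The region filter described above might look something like this minimal sketch. It assumes every object has already been flattened to one text string and that you have a list of region names at hand. The function name and the sample texts are made up, and stripos() only ignores case for ASCII letters.

```php
<?php
// Keep only the texts that do not mention any region other than the home region.
function dropOtherRegions(array $texts, string $homeRegion, array $regions): array
{
    // every region except the one the location is in
    $others = array_filter($regions, function ($region) use ($homeRegion) {
        return strcasecmp($region, $homeRegion) !== 0;
    });

    return array_values(array_filter($texts, function ($text) use ($others) {
        foreach ($others as $region) {
            if (stripos($text, $region) !== false) {
                return false; // mentions another region, drop it
            }
        }
        return true;
    }));
}

// Made-up object texts, only to show the idea.
$texts = [
    'Floda kyrka, Södermanland',
    'Floda kyrka, Dalarna',
    'Flodafors bruk',
];
print_r(dropOtherRegions($texts, 'Södermanland', ['Södermanland', 'Dalarna', 'Skåne']));
```

Objects that mention no region at all are kept, so this only removes the obvious misses.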

The bounding box for an area covering all the places with the used names returned 32 results.

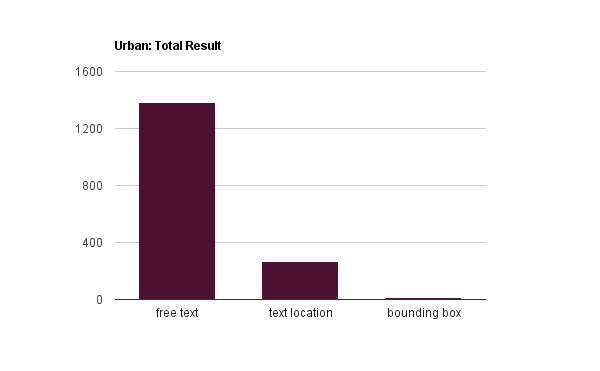

The urban area did cause some trouble, as about half of the place names used ended up being useless store names. One of the place names used was the name of the local museum, Sörmlands Museum. Sadly, this did not cause any bad results, because this is a museum which keeps its collections behind paywalls and unavailable through the SOCH API.

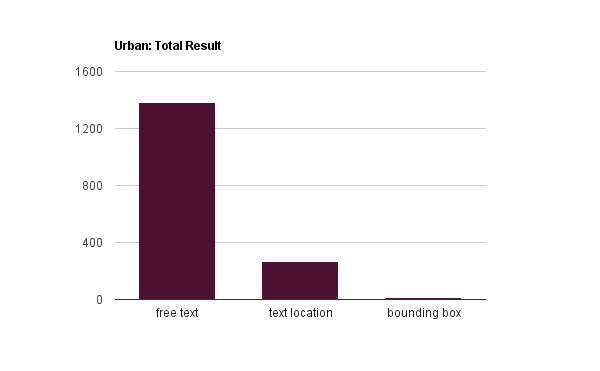

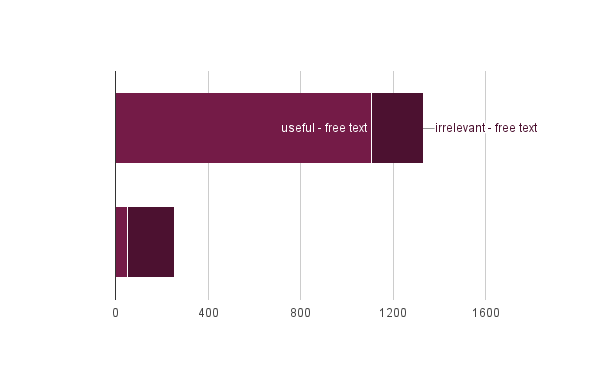

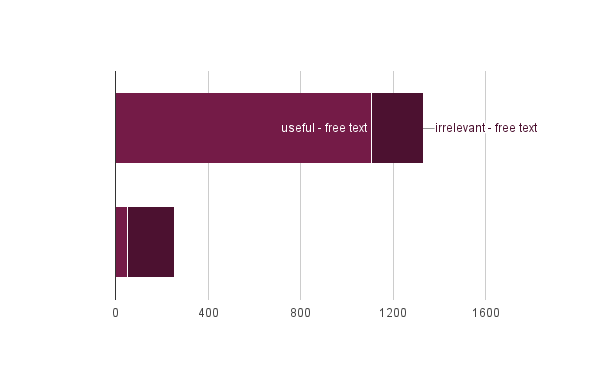

There were 1640 unique results this time, almost 100 fewer than for the non-urban example, because store names and boring museums do not provide any results. The text-location searches returned 256 results, of which only about 20% were useful, mostly because of the fuzzy search behind SOCH (one of the stores contained the name of a church in some foreign area). The free-text searches returned 1384 objects, of which about 80% were useful! I came to the conclusion that this was because the name of the former castle Nyköpingshus provided many results and is not shared with any other location (which was an issue in the non-urban location).

The relevant bounding box for the urban area returned 13 results.

It should be noted that the difference in results between the non-urban and the urban area is affected by the fact that the area covered is much larger for the non-urban area, as a result of the lower density of place names there.

Although using a reverse geocoder often gave more irrelevant results than relevant ones, this is definitely an improved way of searching: manual searches would give the same irrelevant results, and many of the irrelevant objects can be sorted out with simple techniques. Using this and a few other tricks has really helped me when I have been looking for content deep in the oceans of data.

I should also note that each location query ended up with eleven HTTP requests to the Kringla.nu MediaRSS interface (a cheaty way of using the SOCH API), but if such a search feature were added to the actual API it would not be super heavy (at least not for you, the client).

I’m heading to Helsinki tomorrow for Hack4FI - hack your heritage, as a part of Wikimedia Finland’s Wikidata project, and you should definitely catch me if you want to chat about linked data or something else!

26th January 2016

This is the second of two parts introducing the KSamsök-PHP API library; the first part is KSamsök-PHP: the Basics.

Extending

When KSamsök-PHP does not have a method for a request you want to perform against the K-Samsök API, extending KSamsök-PHP might be the solution. Extending KSamsök-PHP allows you to use its functions for formatting URIs, validating responses, parsing and more, together with your own custom requests.

Extending the kSamsok class gives your new child class access to a set of protected methods useful to you.

class customKSamsok extends kSamsok {

// your custom methods

}

In the following example we will write a method that, based on a text search string, retrieves the first 250 photos that have the media type image/jpeg and have thumbnails. Note that KSamsök-PHP can only parse the presentation format, so make sure you always append &recordSchema=presentation.

Preparing the URL

The prepareUrl() method deals with encoding and whitespace in your URL and makes sure it’s valid, so always use it! Note that we access the API key with $this->key and the endpoint with $this->url.

class customKSamok extends kSamsok {

public function photoSearch($text) {

$url = $this->url . 'x-api=' . $this->key . '&method=search&hitsPerPage=250&query=itemType="Foto"%20and%20text="' . $text . '"%20and%20mediaType="image/jpeg"%20and%20thumbnailExists=j&recordSchema=presentation'; // build the URL

$url = $this->prepareUrl($url); // Fix possible encoding/whitespace issues

}

}

Validating the Response

validResponse() validates a response, returning false if the resource is inaccessible or broken.

class customKSamok extends kSamsok {

public function photoSearch($text) {

$url = $this->url . 'x-api=' . $this->key . '&method=search&hitsPerPage=250&query=itemType="Foto"%20and%20text="' . $text . '"%20and%20mediaType="image/jpeg"%20and%20thumbnailExists=j&recordSchema=presentation'; // build the URL

$url = $this->prepareUrl($url); // Fix possible encoding/whitespace issues

if (!$this->validResponse($url)) { // return false if the resource is inaccessible

return false;

}

}

}

Parsing the Results

KSamsök-PHP parses each item (record) one by one as a SimpleXMLElement object using its parseRecord() method. Note the use of killXmlNamespace().

class customKSamok extends kSamsok {

public function photoSearch($text) {

$url = $this->url . 'x-api=' . $this->key . '&method=search&hitsPerPage=250&query=itemType="Foto"%20and%20text="' . $text . '"%20and%20mediaType="image/jpeg"%20and%20thumbnailExists=j&recordSchema=presentation'; // build the URL

$url = $this->prepareUrl($url); // Fix possible encoding/whitespace issues

if (!$this->validResponse($url)) { // return false if the resource is inaccessible

return false;

}

$xml = file_get_contents($url); // get the resource contents

$xml = $this->killXmlNamespace($xml); // bypass XML-Namespaces for the SimpleXMLElement class

$xml = new SimpleXMLElement($xml); // create the SimpleXMLElement object

$result = array(); // array container for the parsed items

foreach ($xml->records->record as $item) { // loop through all the items

$result[] = $this->parseRecord($item); // push parsed item to result array

}

return $result;

}

}

Usage

Using your new method is now as easy as using any of the basic ones:

$kSamsok = new customKSamok('test');

$searchResultArray = $kSamsok->photoSearch('kyrka');

Now have fun and build something, check out the Documentation and report any evil bugs at GitHub.


25th January 2016

K-Samsök, also known as SOCH, is an aggregator and API for Swedish cultural heritage institutions. It’s developed by the Swedish National Heritage Board and at the time of writing it has 6,066,262 items indexed.

- 2,660,852 items have images

- 1,612,261 items have coordinates

- 628,582 media items have a public-domain-like license

- 2,929 items are maps

- and there are tons of linked data in there

Because content APIs that default to RDF suck (I love linked data, but still), messy URIs suck, and K-Samsök has amazing content, I wrote this API library.


Installing

Installing KSamsök-PHP requires the Composer package manager:

composer require abbe98/ksamsok-php

Initialize

K-Samsök requires an API key; for messing around you can use test. Create a new instance of the KSamsok class and provide your API key:

$kSamsok = new KSamsok('test');

Making a Simple Text Search

Making a text-based search is done using the search method:

$kSamsok = new KSamsok('test');

$searchResultArray = $kSamsok->search('kanon', 1, 60);

Oh, parameters! The first one ('kanon') is the string to search for. The second parameter defines where in the results you want to start retrieving items; for example, 1 means you want to start from the beginning of the search results. The last parameter in the example above tells the API how many items you want to retrieve (1-500 is valid).
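If you want to walk through a whole result set, the start values for each request can be computed up front. This is only a sketch: pageStarts() is a hypothetical helper and not part of KSamsök-PHP, and it assumes that results are numbered from 1, as in the example above.

```php
<?php
// Compute the start position of every page needed to cover $totalHits results.
function pageStarts(int $totalHits, int $hitsPerPage): array
{
    if ($hitsPerPage < 1 || $hitsPerPage > 500) {
        throw new InvalidArgumentException('hitsPerPage must be between 1 and 500');
    }

    $starts = [];
    for ($start = 1; $start <= $totalHits; $start += $hitsPerPage) {
        $starts[] = $start;
    }

    return $starts;
}

// 150 hits in pages of 60 items gives the start positions 1, 61 and 121.
foreach (pageStarts(150, 60) as $start) {
    // here you would call $kSamsok->search('kanon', $start, 60);
    echo $start, "\n";
}
```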

The following example would search for the string kyrka, starting at result 60 and retrieving 60 results:

$kSamsok = new KSamsok('test');

$searchResultArray = $kSamsok->search('kyrka', 60, 60);

There is an optional parameter too:

$kSamsok = new KSamsok('test');

$searchResultArray = $kSamsok->search('kyrka', 60, 60, true);

This would do the same thing as the last example but only request items with images. Neat, isn’t it?

Okay, that’s a basic text-based search, let’s move on.

Autocompleting Search

K-Samsök has a simple method for autocompletion and it’s super simple to use with KSamsök-PHP:

$kSamsok = new KSamsok('test');

$searchHintObject = $kSamsok->searchHint('ka', 3);

The first searchHint() parameter is the string to get autocomplete suggestions for. The second parameter is optional and sets the number of suggestions to retrieve; the default value is 5. So now you can actually create a basic search engine.

Searching within a Bounding Box

Now let’s check out the KSamsök-PHP method for making bounding box searches:

$west = '16.41';

$south = '59.07';

$east = '16.42';

$north = '59.08';

$kSamsok = new KSamsok('test');

$bBoxResultsArray = $kSamsok->geoSearch($west, $south, $east, $north, 300, 500);

The parameters should be quite straightforward: the first four define the lat/lon borders of the bounding box. The first integer parameter defines where in the results to start retrieving items, and the last parameter defines how many results to retrieve (1-500 is valid).
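If you only have a single point, a rough box around it can be computed like this. It is a sketch with a hypothetical helper, boundingBoxAround(), which is not part of KSamsök-PHP. The half size is given in degrees, so at Swedish latitudes the box ends up narrower east to west than north to south.

```php
<?php
// Build a [west, south, east, north] box around a point, in geoSearch() order.
function boundingBoxAround(float $lat, float $lon, float $halfSize): array
{
    if ($halfSize <= 0) {
        throw new InvalidArgumentException('halfSize must be positive');
    }

    return [
        round($lon - $halfSize, 6), // west
        round($lat - $halfSize, 6), // south
        round($lon + $halfSize, 6), // east
        round($lat + $halfSize, 6), // north
    ];
}

// A box of 0.01 x 0.01 degrees, the same size as in the example above.
list($west, $south, $east, $north) = boundingBoxAround(59.075, 16.415, 0.005);

// here you would call $kSamsok->geoSearch($west, $south, $east, $north, 1, 500);
echo implode(', ', [$west, $south, $east, $north]), "\n";
```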

The remaining public methods of KSamsök-PHP use URIs as the main parameter, so first let me introduce you to URIs in K-Samsök. Let’s take a look at the following URI: raa/kmb/16000300020896. This is the most “raw” URI you can get in K-Samsök. First the data provider is defined and then there is a regular ID.

To retrieve the XML source of this item (from the API) you would guess there would be some parameter or extension, such as raa/kmb/16000300020896.xml or raa/kmb/16000300020896?format=xml. This is actually how it’s done: raa/kmb/xml/16000300020896.

As a result, an item’s URIs and URLs are somewhat diverse:

raa/kmb/16000300020896

raa/kmb/xml/16000300020896

raa/kmb/rdf/16000300020896

raa/kmb/html/16000300020896

http://kulturarvsdata.se/raa/kmb/16000300020896

http://kulturarvsdata.se/raa/kmb/xml/16000300020896

http://kulturarvsdata.se/raa/kmb/rdf/16000300020896

http://kulturarvsdata.se/raa/kmb/html/16000300020896

Yep, that’s the same item. In KSamsök-PHP it does not matter which URI or URL you provide; it handles it for you out of the box!
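To see why all of these forms are the same item, here is a small sketch that reduces any of them to the raw URI. It is not how KSamsök-PHP does it internally. rawUri() is a hypothetical helper, and it assumes the format segment is always xml, rdf or html and sits right before the ID.

```php
<?php
// Reduce a K-Samsök URI or URL to its raw form, e.g. raa/kmb/16000300020896.
function rawUri(string $uriOrUrl): string
{
    // drop the host part if a full URL was given
    $path = preg_replace('#^https?://kulturarvsdata\.se/#', '', $uriOrUrl);

    // drop the format segment when it sits right before the ID
    return preg_replace('#/(xml|rdf|html)/(?=[^/]+$)#', '/', $path);
}

$variants = [
    'raa/kmb/16000300020896',
    'raa/kmb/xml/16000300020896',
    'raa/kmb/rdf/16000300020896',
    'raa/kmb/html/16000300020896',
    'http://kulturarvsdata.se/raa/kmb/16000300020896',
    'http://kulturarvsdata.se/raa/kmb/xml/16000300020896',
    'http://kulturarvsdata.se/raa/kmb/rdf/16000300020896',
    'http://kulturarvsdata.se/raa/kmb/html/16000300020896',
];

// every line printed is raa/kmb/16000300020896
foreach ($variants as $variant) {
    echo rawUri($variant), "\n";
}
```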

Getting a Single Item

Let’s return a single item based on its URI or URL:

$kSamsok = new KSamsok('test');

$itemObject = $kSamsok->object('http://kulturarvsdata.se/shm/site/xml/18797');

The following example would return the same result:

$kSamsok = new KSamsok('test');

$itemObject = $kSamsok->object('shm/site/18797');

Getting Items Relations

Returning all relations for an item is just as neat:

$kSamsok = new KSamsok('test');

$relationsObject = $kSamsok->relations('shm/site/18797');

Now we only have one public method left and it’s uriFormat(). uriFormat() converts K-Samsök URIs and URLs to any URI/URL type and can even validate them:

$kSamsok = new KSamsok('test');

$uriString = $kSamsok->uriFormat('shm/site/18797', 'xmlurl');

This would result in the string http://kulturarvsdata.se/shm/site/xml/18797 being returned. The first parameter is simply the item URI or URL. The second parameter sets the type of URI or URL to be returned (see the documentation). By setting a third optional parameter to true you can trigger validation of the URI (returns false if invalid).

That’s the basic usage of KSamsök-PHP. In part two we will extend its features using its protected methods, to be able to take advantage of all of K-Samsök’s powerful native methods.


10th January 2016

This winter holiday is coming to a close. I have not only been inaccessible in some mountains cross-country skiing all day, although most people seem to believe so. This holiday I actually had two dedicated “holiday projects”; a holiday project is simply a project that goes from idea to launch in a single holiday.

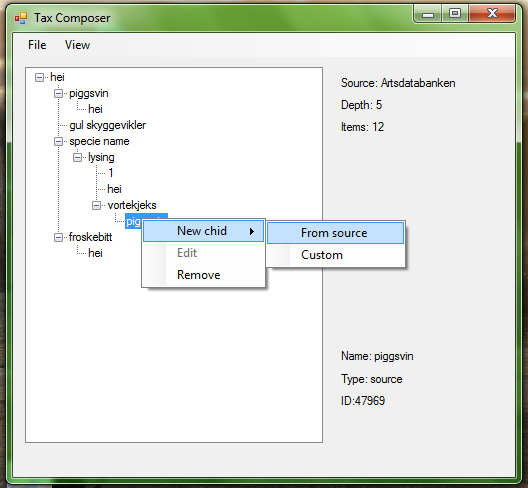

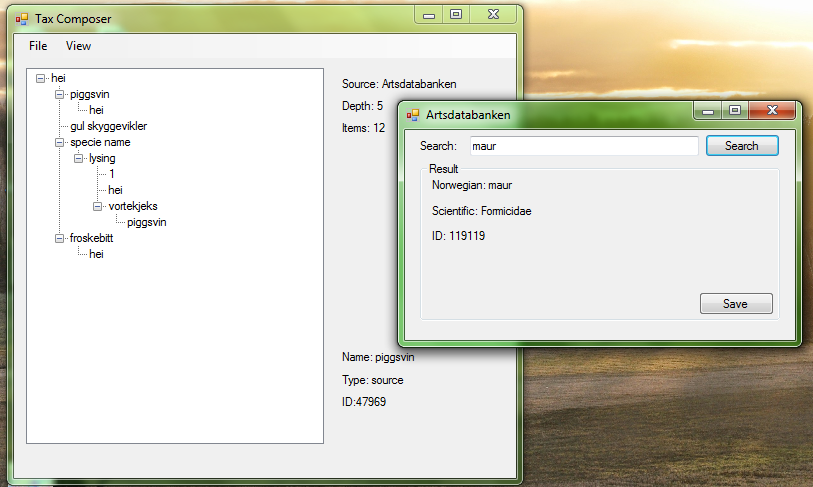

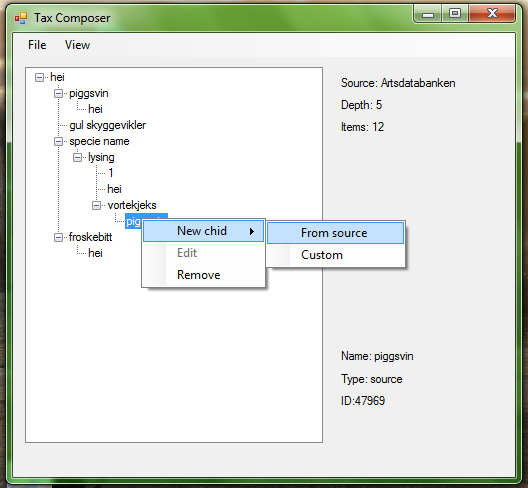

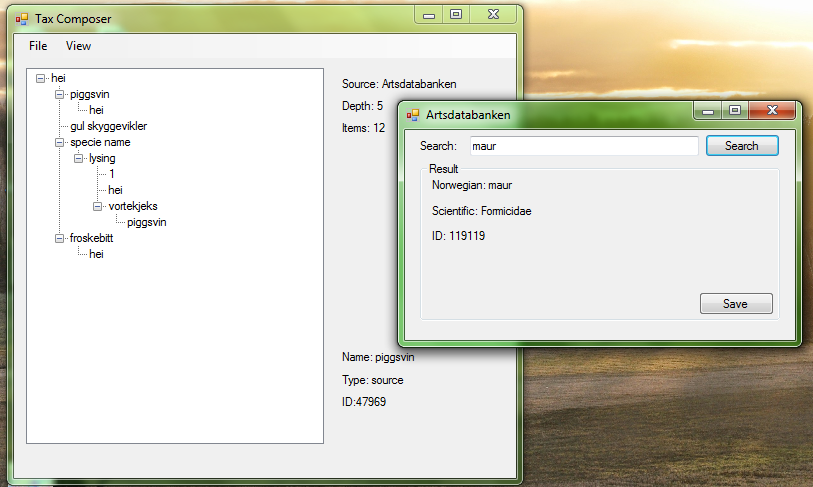

Tax Composer came out of a need to create custom taxonomy (species hierarchy) schemes for the Biocaching project. Custom schemes allow us to display different taxonomies to, for example, school classes and bird lovers without touching the scientific one in our database.

Tax Composer is simply a Windows desktop application for building (composing) taxonomy XML schemes from external sources. Currently it supports sources such as the Encyclopedia of Life and the Norwegian Artsdatabanken.

Last time I wrote C# I think I was about 10 years old and I was definitely running Windows XP. When I started this project I thought of Visual Studio as a bloated, overkill piece of expensive software and of C# as not so flexible, documented or open. Also, I had “a bit of an issue” with Visual Studio editing my files without me knowing about it.

Now?

Visual Studio and C# are quite awesome. First off, I ditched the expensive Visual Studio Ultimate edition (Enterprise nowadays?) that I had access to. The free Community edition plus a Git(Hub) plug-in was all I needed. Visual Studio is powerful: it has awesome debugging features and its autocompletion of my code is super useful. C# is still a statically typed language but I have no issues with that, and that behind-the-scenes editing is great.

The only thing I actually have had a few issues with is Windows Forms. It’s great for building basic interfaces fast, but it’s the “only thing” keeping Tax Composer from being cross-platform, and it isn’t responsive. I knew I could use Windows Presentation Foundation instead, but it lacks good basic getting-started resources (Windows Forms doesn’t even need them) and it would have delayed my actual coding.

The Tax Composer project was almost the perfect project for getting started with C# for desktop applications again, it included:

- basic input validation

- saving/editing files

- HTTP requests

- XML parsing

- custom data types

A few things such as statics and basic graphics could be nice to know too, but this is a good start.

If you have found any reason why you would need to build custom taxonomy schemes: